SCCM 2012 Data Replication Service

(DRS)

Data replication Service came as a new feature for SCCM 2012

for the replication between the sites along with the legacy file based

replication that was used in the earlier versions. Sometimes people refer it as

a SQL replication which is incorrect as ‘SQL replication’ is a standalone SQL

feature and SCCM 2012 is designed not to

use it. Though it would be correct to say the replication is SQL based as we

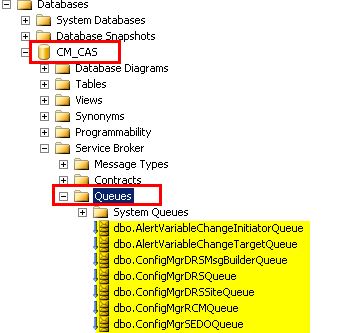

use SQL features like SQL Service Broker (SSB) and Change Tracking along with

Bulk copy program (BCP). The component is partly written in SQL and partly in

ConfigMgr (known as Replication Configuration Monitor- RCM).

Advantages of DRS implementation in

SCCM 2012

SCCM 2007 was designed with multiple tiers in the

hierarchy

One of the pain areas

of the SCCM 2007 was that we could design multiple tiers in the hierarchy and a

big issue generators being ‘Package status for a third/fourth tier site not

being yet reported to the Central console. Stuck in Install pending state’.

Generally in such cases if any one of the tiers in between are stuck in sending

huge packages then the status message being the lowest priority was delayed and

with many packages around it used be a tedious task troubleshooting such issues

which used to get resolved after days automatically (once the sending was

completed)

With SCCM 2012, there is

hierarchy limitation of a single primary site under CAS which ultimately can

have secondary’s under it. So it is better

to say the hierarchy was flattened for the better. Also, there was definitely a

huge need in the differentiation between -

a. Data (Status messages, inventory,

Collection changes, package changes, site wide changes)

b. Content (The actual source files for

the packages and applications)

So, SCCM 2012 went ahead

in prioritizing the data as a SQL based replication which is known as DRS to be

independent way of replication than the content which was still kept as a

legacy file based replication.

Redundancy in data processing with SCCM 2007.

Consider an inventory

for a client from the tier 3 site. This will process there and then will move

up in the hierarchy via the legacy replication. So in all processing in each of

the upper tiers plus the bandwidth wastage in sending.

With ConfigMgr 2012,

we don’t have the multi-tier primary hierarchies and the data is only processed

on the reporting primary site and replicated to the CAS via DRS.

What do DRS actually replicate?

a. Global data: The data which is common

across the hierarchy. It is shared between CAS and all the primaries. So what

comes in Global data? I would say the best way to remember this is by

answering the questions ‘What an admin can create?’ viz. Packages,

collections, applications etc. Now, as I mentioned that this common across the

hierarchy this was not the case in ConfigMgr 2007. A package created on a Tier

2 site will only move down and not up.

A derivation of the Global data is the ‘Global Proxy’

data which is shared across the primaries and their respective secondaries.

Also, a design change in ConfigMgr 2012 is secondary now will have a database.

Reason is clear as we have moved to DRS we will need SQL DB to there for the

packages and other stuff data replication.

b. Site data: The data which comes from

the client can be termed as site data. The client directly reports to the

corresponding primary and this becomes the site data. The data is shared

between the CAS and the respective primaries. Meaning- Primary1 will not have

the data of Primary 2 but CAS will have the data of both the primaries.

How do DRS differentiate Global data and Site data?

Data is broken into global data and site data, and then

it is further divided them into categories known as ‘Replication groups’ for

better management.

In simple words, a replication group is nothing but a

groups of tables. SCCM 2012 have around 12 replication groups in global data.

The division is simply on the basis of the type. E.g. Alert tables combined to

form ‘Alerts’ group. Configuration information combined to form a group

‘Configuration Data’.

Similarly, site data is divided into replication groups.

Few important ones are Hardware_inventory_* (* is a number starting from 0 and

this can grow depending on the magnitude and types of H/W inventory information

collected.) Software Metering is one of the other ones.

There is one more thing which is ‘Replication pattern’

which is nothing but the type of data viz. Global, site or Global proxy.

How to query the replication

group that belong to a data?

For Global Data –

select

* from vReplicationData where Replicationpattern = 'global'

For Site Data –

select * from vReplicationData where Replicationpattern =

'site'

Below is the Screenshot of the first query – Global data

Now once we know the groups, we can also find which tables

is actually a part of a replication group. For example if we want to find the

tables for the ‘Alerts’ group.

select ArticleName from ArticleData where ReplicationID =

(select ID from vReplicationData where ReplicationGroup = 'Alerts')

Assume a requirement in which we want to find all

hardware inventory related tables then the query could be like –

select ArticleName from ArticleData where ReplicationID

in (select ID from vReplicationData where ReplicationGroup like

'Hardware_Inventory%')

Now that we have a bit of background of DRS, let’s move

to how the replication is fulfilled through DRS :

This can be broken down to two parts –

a. Site

Initialization – This is the first step where the data gets copied

in bulk to the given primary site. Taking a scenario of a new Primary site

creation it needs all the global data from the CAS.

The process is simple –

Ø

The

primary asks for the init to the CAS. This transfers to the CAS as a RCM (Replication

Configuration Management/Monitoring) message from the RCM service broker queue.

Ø

CAS once

it gets the message calls the BCP OUT function to copy all the global data from

the database to *.bcp files along with the proper rowcounts (*.rowcount) for

each table.

Ø

This is

then compressed by the RCMCtrl on the CAS and then send to the primary via the

legacy sender.

Ø

The

primary gets the data and then performs the BCP IN to insert the data back into

the SQL database.

What is maintenance mode? What are the different modes a site

can have?

When a site in initializing its

global/site data it goes into maintenance mode. There are two types of

maintenance modes.

1.

Site Maintenance – When

the primary site is being installed it is not usable and is in a Site

maintenance mode. The console is in a read only mode.

2.

Replication Maintenance – The

CAS goes into replication maintenance when it is yet to get the site data from

any of its primaries. During this time the site is usable but it will never

replicate any data to any other sites.

|

SiteStatus

|

Mode

|

||||||||||||||||||||||||||||||||||||

|

|

It is clear that if the

replication groups when not get initialized we will be having the site in

Maintenance mode. When primary does not have the Global data initialized it

will be in Maintenance mode and will not give the site data to CAS keeping it

in maintenance too.

Below are the different modes a replication group can be?

|

0

|

'Unknown'

|

|

1

|

'Required'

|

|

2

|

'Requested'

|

|

3

|

'PendingCreation'

|

|

4

|

'PackageCreated'

|

|

5

|

'PendingApplication'

|

|

6

|

'Active'

|

|

7

|

'Aborted'

|

|

9

|

'Failed'

|

We can check the InitializationStatus for the replication group in the RCM_DRSInitializationTracking table.

b. Site

Active – Once the site has initialized its global data then it is

ready for production and goes Active. So all the activities will now use DRS

replication; as an example – We create a package on the primary. Here the

sequence of activities are –

1. The

provider will write to the corresponding tables for the package (SMSPackages_G

etc) in the primary site.

2. These

tables are actually enabled for the feature ‘SQL Change tracking’. So when the

new data gets inserted into the SMSPackages_G table only the primary key column

information is stored in the Change tracking tables. The point in keeping only

the primary key information is to avoid increasing the size of the change

tracking table. The whole information of the package to be send can be obtained

later by joining Change tracking table to the original SMSPackages_G table on

the primary key column.

What is SQL change tracking? - Change

tracking is to identify and keep track of what changed in the Database like a

row insertion, updation or deletion. We need this as we have to now only send

the changes to the CAS and not the whole global data again as both the CAS and

primary are now in page.

How Change Tracking works in background –

For every table that is enabled

for change tracking we have one more internal table

change_tracking_<object_id >. When a row gets inserted in the actual

table then a corresponding entry (containing) only the primary key information

gets added in the internal table change_tracking_<object_id >. For every

successful transaction that is committed for a table we see a row in the sys.syscommittab table.

Thesys.syscommittab is a system table which can be referenced by

thesys.dm_tran_commit_table view.

Let’s table an example of

SMSPackages_G. How do we find the corresponding internal change tracking table

for the same –

select OBJECT_NAME(OBJECT_ID)

[ObjectName], * from sys.change_tracking_tables where OBJECT_NAME(OBJECT_ID)

like '%smspackages%'

From the object id we will get

the change tracking table for the same –

select name,internal_type_desc,*

from sys.internal_tables where name like '%1675153013%'

Similarly for getting a view of

sys.syscommittab use the below query –

select * from

sys.dm_tran_commit_table

Also, Change Tracking is

something that has to be enabled for the database and also for the tables.

For

finding the change tracking enabled databases

select * from

sys.change_tracking_databases

For

finding the change tracking enabled tables

select * from

sys.change_tracking_tables

3. Converting

the change into a message and then placing it in the corresponding SQL service

broker (SSB) queue. The message will be then passed to the corresponding queue

of the other SSB endpoint by the corresponding SQL broker service.

For

those who don’t know what SQL service broker is, to clarify it is nothing but a

mechanism to deliver messages end to end. So Why SQL SSB? Well there are many

reason as to why it is awesome -

a. It

provides faster transactional ability as it is the part of engine itself.

b. It

provides reliability and enhanced security. Reliability in terms of any message

that goes out the SQL SSB stays in the sys.transmission_queue until it receives

the acknowledgment for the same. Also these queues are not running on Memory

but stored in the form of tables hence never get lost in outages. Security is

brought by the certificates used to encrypt the messages if it’s going to the

endpoint on the other SSB.

c. It

uses asynchronous communication tactic. Meaning, it never polls for the

messages but as and when the messages arrive they invoke the activation

procedure based on the queue on which they arrive. The procedure then takes

care as to how to process this message.